Streamlining Salesforce Data Upload with SFDX in Git and Jenkins Workflow

Introduction:

In Salesforce development, deploying components from one org to another is a common practice. However, a standard metadata deployment moves components, not records, so data uploads and data synchronization between orgs are often left as manual steps. This blog post presents a solution that integrates data loading into your deployment pipeline using Git, Jenkins, and the Salesforce CLI (SFDX). By automating data upserts, you can reduce manual effort, make deployments more efficient, and keep data consistent across orgs.

Prerequisites:

Before implementing the data upload solution, make sure you have the following prerequisites in place:

## 1. Understanding Salesforce Data Bulk Upsert and SFDX:

A bulk upsert inserts or updates Salesforce records in a single operation. It uses a unique identifier field (an external ID field or the record Id) to decide which operation applies: a row whose identifier matches an existing record updates that record, and a row with no match creates a new one. Run through the Salesforce Bulk API, the upsert is processed asynchronously in batches, which makes it suitable for large datasets of thousands or even millions of records. SFDX (the Salesforce CLI) is a command-line tool that provides a unified way to manage Salesforce applications, metadata, and data.
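As a rough illustration of the matching rule only (a toy model, not how Salesforce implements upsert), the shell function below classifies each row of an incoming CSV as an update or an insert, depending on whether its external ID already exists. It assumes the existing IDs are listed one per line in a file, and that the incoming file is a simple CSV with a header row, the external ID in the first column, and no quoted commas. The function and file names are hypothetical.

```bash
#!/usr/bin/env bash
# Toy model of upsert matching (hypothetical helper, not a Salesforce API call).
# $1: file with one existing external ID per line
# $2: incoming CSV with a header row and the external ID in the first column
# Assumes simple CSV without quoted commas.
classify_upsert() {
  local existing_ids_file="$1" incoming_csv="$2"
  awk -F',' '
    FILENAME == ARGV[1] { existing[$1] = 1; next }  # first file: remember existing IDs
    FNR == 1 { next }                               # skip the incoming CSV header row
    {
      # A known ID means the record would be updated; an unknown ID means inserted
      action = ($1 in existing) ? "update" : "insert"
      print action, $1
    }
  ' "$existing_ids_file" "$incoming_csv"
}
```

For example, with `EXT-1` and `EXT-2` already in the org, an incoming file containing rows for `EXT-1` and `EXT-3` yields `update EXT-1` and `insert EXT-3`.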

## 2. Setting Up Git and Jenkins for Salesforce Data Bulk Upsert:

Before diving into the data bulk upsert process, make sure Git and Jenkins are set up for your Salesforce project. This includes creating a GitHub repository to host your project and configuring Jenkins to automate deployment tasks. The pipeline in this post relies on `env.BRANCH_NAME`, which Jenkins populates in Multibranch Pipeline jobs.

## 3. Install Salesforce CLI on the Jenkins Server:

Install the Salesforce CLI on the machine (or agent) where Jenkins runs its builds. The installation steps depend on the server's operating system; see the official installation guide in the Salesforce CLI documentation. Make sure the `sfdx` executable is on the `PATH` of the user that Jenkins runs as, because the pipeline calls it from `sh` steps.
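A quick way to confirm that the CLI is visible to Jenkins is a check like the one below, run as an early `sh` step. The function name is hypothetical, and the commented install command is one common option that assumes Node.js and npm are present on the agent. Follow the official guide for your platform and confirm that the installed version still supports the `force:data:bulk` commands used in this post.

```bash
#!/usr/bin/env bash
# Check that the Salesforce CLI is on the PATH of the user running the build.
# One possible install (assumes Node.js and npm on the agent):
#   npm install --global @salesforce/cli
require_sfdx() {
  if command -v sfdx >/dev/null 2>&1; then
    echo "sfdx found at $(command -v sfdx)"
  else
    # Print the PATH to make agent misconfiguration easy to spot in the build log
    echo "sfdx not found on PATH=$PATH" >&2
    return 1
  fi
}
```

Failing early with a clear message is easier to diagnose than a `command not found` error in the middle of the data load stage.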

## 4. Preparing the Data for Bulk Upsert:

To perform a bulk upsert, prepare the data as a CSV (Comma-Separated Values) file, which is the format the SFDX bulk data commands accept. The header row must contain the API names of the fields you are loading, and one column must be the unique identifier field (typically an external ID field) that the upsert uses to match rows to existing records in your Salesforce object.
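For illustration, a CSV for a hypothetical `Custom_Object__c` with an `External_Id__c` external ID field and a `Name` field could look like the commented sample below. The helper function (also a hypothetical name) checks that the header row contains the matching column before anything is sent to Salesforce. It assumes unquoted header names and tolerates Windows line endings.

```bash
#!/usr/bin/env bash
# Sample CSV (hypothetical fields): the header row uses field API names,
# and External_Id__c is the column the upsert matches on.
#
#   External_Id__c,Name
#   BOOK-001,First booking
#   BOOK-002,Second booking

# Succeeds if the CSV header row contains the given column name exactly.
csv_has_column() {
  local csv_file="$1" column="$2"
  # Take the header line, drop a trailing carriage return, put one column
  # name per line, and look for an exact, fixed-string match.
  head -n 1 "$csv_file" | tr -d '\r' | tr ',' '\n' | grep -Fxq -- "$column"
}
```

For example, `csv_has_column data.csv External_Id__c || exit 1` stops a build before the upload if the external ID column is missing.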

## 5. Configuring the SFDX Data Bulk Upsert Command:

The Salesforce CLI provides `force:data:bulk` commands for bulk data operations. To run a bulk upsert, follow these steps:

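1. Open a command prompt or terminal and navigate to your Salesforce project directory.

2. Authenticate with your Salesforce org using the Salesforce CLI, for example with `sfdx force:auth:web:login` for an interactive login, or with `sfdx force:auth:jwt:grant` on a build server (the Jenkinsfile below uses the JWT flow).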

3. Use the following command to perform the data bulk upsert:

   ```bash
   sfdx force:data:bulk:upsert -s <ObjectAPIName> -f <CSVFilePath> -i <ExternalIdFieldAPIName>
   ```

   Replace `<ObjectAPIName>` with the API name of the Salesforce object, `<CSVFilePath>` with the path to your CSV file, and `<ExternalIdFieldAPIName>` with the API name of the unique identifier field.
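These steps can be wrapped in a small helper so the same invocation is reused locally and in CI. This is a sketch: `bulk_upsert`, `DRY_RUN`, and the org alias are hypothetical, and the org is assumed to be authorized already (for example with `sfdx force:auth:web:login --setalias my-stage-org`). The optional `-u` flag selects that org instead of the default one.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around: sfdx force:data:bulk:upsert -s <object> -f <csv> -i <external id>
# Usage: bulk_upsert <ObjectAPIName> <CSVFilePath> <ExternalIdFieldAPIName> [org alias or username]
# Set DRY_RUN=1 to print the command instead of running it.
bulk_upsert() {
  local object="$1" csv_file="$2" external_id="$3" target_org="${4:-}"
  if [ ! -f "$csv_file" ]; then
    echo "CSV file not found: $csv_file" >&2
    return 1
  fi
  # Build the command as an array so paths with spaces stay intact
  local -a cmd=(sfdx force:data:bulk:upsert -s "$object" -f "$csv_file" -i "$external_id")
  # -u targets a specific org; without it the CLI uses the default org
  if [ -n "$target_org" ]; then
    cmd+=(-u "$target_org")
  fi
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `DRY_RUN=1 bulk_upsert Custom_Object__c data/records.csv External_Id__c my-stage-org` prints the command it would run without contacting Salesforce (the CSV file must exist).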

Integrating SFDX Data Bulk Upsert with Git and Jenkins:

Now that you have configured the SFDX data bulk upsert command, it’s time to integrate it into your Git and Jenkins workflow:

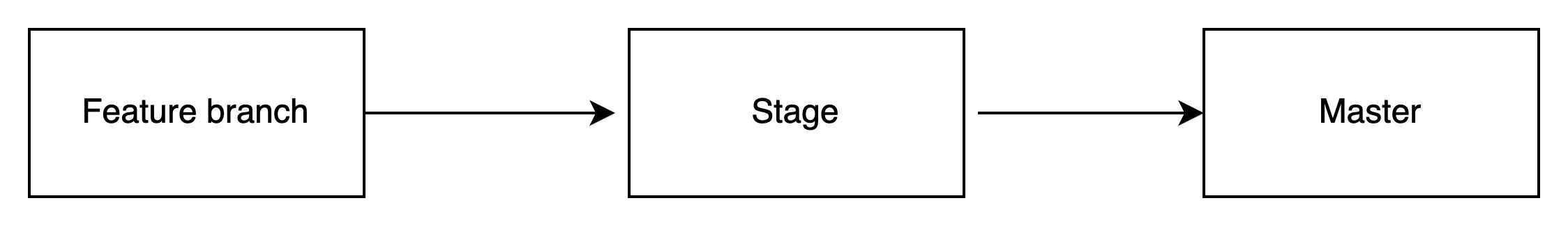

Consider the workflow shown in the diagram above, where:

- the `stage` branch is authenticated with the Salesforce stage org,
- the `master` branch is authenticated with Salesforce production, and
- a feature branch is created for every data upload or deployment task, merged into `stage`, and then merged into `master`.
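The branch-to-org relationship in this workflow can be written down as a simple lookup. In the sketch below, the function name, the org aliases, and the production CSV path are placeholders; only the `stage` CSV path comes from the Jenkinsfile in this post.

```bash
#!/usr/bin/env bash
# Hypothetical mapping from Git branch to "<org alias> <CSV path>".
# stage -> stage (sandbox) org, master -> production; other branches load nothing.
target_for_branch() {
  case "$1" in
    stage)  echo "stage-org zen_booking/zen_package_booking_stage.csv" ;;
    master) echo "production-org path/to/production_data.csv" ;;
    *)      return 1 ;;  # feature branches are merged, not loaded directly
  esac
}
```

A caller could run `if line=$(target_for_branch "$BRANCH_NAME"); then read -r org csv <<< "$line"; fi` and skip the data load when the function returns non-zero.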

## 1. Update the Jenkinsfile:

On both the `master` and `stage` branches, open your Jenkinsfile and add a stage that runs the SFDX bulk upsert command. The stage for the `stage` branch looks like this:

```groovy
stage('Data Push to stage') {
    // Run this stage only for builds of the stage branch
    when { expression { return env.BRANCH_NAME == 'stage' } }
    steps {
        script {
            // Write the JWT private key for the stage org to the workspace without echoing it
            sh '''
                set +x
                echo "${replace_SFDX_server_stage_key}" > ./server.key
            '''

            // Authorize the stage org with the JWT bearer flow and make it the default org
            sh "sfdx force:auth:jwt:grant --clientid ${consumer_key} --username ${replace_user_name} --jwtkeyfile './server.key' --instanceurl ${replace_sfdc_host_sandbox} --setdefaultusername"

            // CSV that drives the data load for this branch
            def packageFilePath = 'zen_booking/zen_package_booking_stage.csv'

            // GET THE FILES CHANGED AS PART OF MERGE REQUEST (last commit vs. its first parent)
            def changedFiles = sh(returnStdout: true, script: 'git diff --name-only HEAD~1 HEAD').trim().split('\n')
            echo "Changes ${changedFiles} in PR"

            // PERFORM DATA LOAD ONLY IF THE FILE HAS BEEN ALTERED
            if (changedFiles.contains(packageFilePath)) {
                echo "*****************Data Load Started!****************"

                // Upload the same CSV that was checked above
                def result = sh(returnStdout: true,
                        script: "sfdx force:data:bulk:upsert -s Custom_Object__c -f ./${packageFilePath} -i External_Id__c --serial")
                echo "$result"

                // The upsert output suggests a status command of the form "-i <jobId> -b <batchId>".
                // Tokenizing keeps only serializable values in scope; a java.util.regex.Matcher
                // (which also needs an import) would fail to serialize when the sleep step runs.
                def tokens = result.tokenize()
                def jobId = tokens.contains('-i') ? tokens[tokens.indexOf('-i') + 1] : null
                def batchId = tokens.contains('-b') ? tokens[tokens.indexOf('-b') + 1] : null
                if (!jobId || !batchId) {
                    error('Could not find the job ID and batch ID in the upsert output')
                }

                // Give Salesforce time to process the batch before checking its status
                sleep(time: 30, unit: 'SECONDS')

                // Query the batch status with the parsed job ID and batch ID
                def response = sh(returnStdout: true, script: "sfdx force:data:bulk:status -i ${jobId} -b ${batchId}")
                echo "****************Getting the response for the Data Load*************: $response"
            }
        }
    }
}
```

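Note: Replace `Custom_Object__c` with the API name of the relevant object, `External_Id__c` with the external ID field used to perform the upsert, and the `packageFilePath` value (`zen_booking/zen_package_booking_stage.csv`) with the folder and file name where your CSV changes are added. The file passed to `-f` must be the same file that `packageFilePath` names; otherwise the change check and the upload refer to different CSVs. The `consumer_key`, `replace_user_name`, `replace_sfdc_host_sandbox`, and `replace_SFDX_server_stage_key` values must be supplied by your Jenkins configuration, for example as environment variables.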

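Similarly, add a stage for the `master` branch that authenticates against the production org with its own credentials and instance URL, and points `packageFilePath` at the production CSV.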
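Two pieces of the stage's logic are easy to check outside Jenkins, so here they are as shell functions with hypothetical names. `file_changed` performs the same exact-match test as `changedFiles.contains(packageFilePath)`, and `value_after_flag` extracts the token after `-i` or `-b`. Like the Jenkinsfile, it assumes the upsert output contains a `force:data:bulk:status -i <jobId> -b <batchId>` hint.

```bash
#!/usr/bin/env bash
# Succeeds if the given path appears (exact match) in a newline-separated
# list of changed files read from stdin, e.g. from:
#   git diff --name-only HEAD~1 HEAD
file_changed() {
  grep -Fxq -- "$1"
}

# Print the token that follows a flag (e.g. -i or -b) in the given text.
# Prints nothing if the flag is absent or is the last token.
value_after_flag() {
  local flag="$1" text="$2"
  # One whitespace-separated token per line; print the line after the flag
  printf '%s\n' "$text" | tr -s '[:space:]' '\n' |
    awk -v f="$flag" 'found { print; exit } $0 == f { found = 1 }'
}
```

For example, `git diff --name-only HEAD~1 HEAD | file_changed zen_booking/zen_package_booking_stage.csv && echo "load needed"` reproduces the stage's change check locally.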

## 2. Create a feature branch and add the CSV for the data upload:

Create a feature branch from `master`, add the folder named in `packageFilePath` (for example, `zen_booking`), and place the required CSV files inside it using the same file names. Commit the CSV files containing the data, then merge the feature branch into `stage` and later into `master`; on each merge, the Jenkins stage detects the changed CSV and runs the upsert.

## 3. Monitoring the success and failure logs:

After merging the changes from the feature branch into `stage` or `master`, verify the data load status in the Jenkins job. Check the response printed at the end of the stage to confirm that the load completed. Because the status is requested only 30 seconds after the job is submitted, a large load may still be in progress at that point. In case any failures occur during the data load, don't worry! Open the "Bulk Data Load Jobs" page in Setup of the corresponding Salesforce org to review the job and find out why records failed.
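To make a Jenkins build fail when records are rejected, the printed status could be checked for failed records. The sketch below assumes the status text contains a line such as `numberRecordsFailed: <n>`; confirm the exact output of your CLI version (or parse its `--json` output instead). The function name and return codes are hypothetical.

```bash
#!/usr/bin/env bash
# Inspect bulk batch status text for failed records.
# ASSUMPTION: the text contains a line like "numberRecordsFailed: <n>".
# Returns 0 if no records failed, 1 if some failed, 2 if the count is missing.
check_failed_records() {
  local status_text="$1" failed
  # Take the first numberRecordsFailed line and keep only the digits after the colon
  failed=$(printf '%s\n' "$status_text" |
    awk -F':' '$1 ~ /numberRecordsFailed/ { gsub(/[^0-9]/, "", $2); print $2; exit }')
  if [ -z "$failed" ]; then
    echo "numberRecordsFailed not found; check the job in Setup" >&2
    return 2
  fi
  if [ "$failed" -gt 0 ]; then
    echo "$failed record(s) failed" >&2
    return 1
  fi
  echo "no failed records"
}
```

Return code 2 distinguishes "could not read the count" (for example, because the output format differs from the assumption) from an actual failure.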

Advantages:

Using SFDX Bulk Data Upsert from a Jenkins script offers several advantages for your Salesforce data management workflows:

## 1. Automation and CI/CD Integration:

By incorporating SFDX Bulk Data Upsert into a Jenkins script, you can automate data loading processes as part of your Continuous Integration/Continuous Deployment (CI/CD) pipeline. This ensures consistent and automated data updates during application development and deployment.

## 2. Efficient Data Loading:

Bulk Data Upsert leverages Salesforce Bulk API, which is optimized for processing large volumes of data. With Jenkins, you can schedule and execute data upserts at specific times, enabling efficient data loading without manual intervention.

## 3. Reduced API Usage:

SFDX Bulk Data Upsert submits records to the Bulk API in batches rather than one request per record, so a large load consumes far fewer API requests than record-by-record loading methods. This helps you stay within your org's API limits.

## 4. Scalability and Performance:

Jenkins lets you add build agents or nodes so that several pipelines can run in parallel, while the record processing itself runs asynchronously on the Salesforce side. This keeps data upserts manageable even for large datasets.

## 5. Error Handling and Reporting:

Jenkins provides excellent error handling and reporting capabilities. You can set up notifications and alerts to monitor the data upsert process and quickly respond to any issues that may arise.

## 6. Security and Access Control:

Jenkins offers robust security features, allowing you to control access to data loading scripts and credentials. You can implement secure authentication methods to protect sensitive data.

## 7. Consistency and Reproducibility:

Jenkins runs data upserts the same way every time they are triggered. This makes loads reproducible and reduces the risk of human error in data loading.

## 8. Scheduling Flexibility:

With Jenkins, you can schedule data upserts to run at specific intervals, during off-peak hours, or based on triggers like code commits or other events. This enhances flexibility and optimization of data loading processes.

In summary, leveraging SFDX Bulk Data Upsert from a Jenkins script offers numerous benefits, including automation, scalability, reduced API usage, error handling, security, and seamless integration with other tools. It simplifies and streamlines your Salesforce data loading workflows while ensuring consistency and efficiency in data management.